The Post-A.I. Creative Class

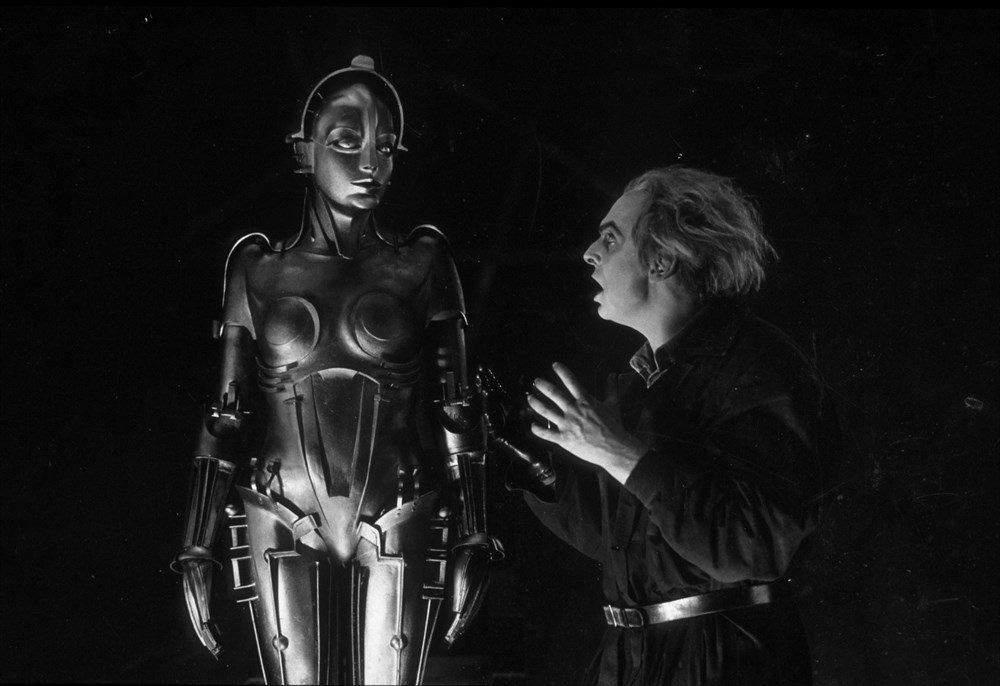

As large language models become more adept at reasoning like humans, who will have leverage in the new world, and how?

The month of December does something to people's brains. The urge to look back on the year coming to an end and create a highlight reel of all the ups and downs, the small wins and the missed opportunities, is second only to the urge to write a bulleted plan of action for changing oneself so thoroughly that the old you will not recognize the new you in the coming 365 days. Goodbye procrastination, take your goals by the horns, finally start on the outline of that novel.

Every December, I find it strange that months, weeks, and days are simply ways to organize our affairs along a temporal dimension, and that we are not entering some portal on the 1st of January where we shed our old selves like an exoskeleton and start becoming a new, idealized self. Having said that, I will confess I am not special.

So, I go through the motions like everyone else on my Twitter feed and think about improvements I need to make. One I have set my mind to is consuming content (news, media, and opinions) through a skeptical lens. I think of it as a cognitive discipline: not forming an opinion without doing at least a basic level of research and some version of "listening to the other side", counterpoints essentially. Of course, besides multiple geopolitical conflicts raging in various parts of the world, 2023 was the year of A.I. hype, more specifically generative A.I. and the new kid on the block that has become the most talked-about consumer tech product in recent memory: ChatGPT. So, like most other white-collar employees of big corporations, freelancers, small business owners, dentists, and lawyers, I am trying to learn, critically, how smart or dumb it is and how anxious (or confident) we should be about our place in an A.I.-centric economy.

Anyone who follows the news cycle around this topic can safely conclude that Gen A.I. has been both super impressive and error-prone. The competency and usefulness of these technologies have been a mixed bag. In April, GPT-4 passed the bar exam (UBE), scoring around the 90th percentile, yet A.I.-generated deepfakes were also recently found to be misleading voters in Bangladesh's elections. Pharma companies are using deep learning models, including generative models like Generative Adversarial Networks (GANs), to help understand protein folding and molecule synthesis, speeding up drug discovery. At the same time, gen A.I. platforms are being used to create all sorts of creative artifacts, with A.I.-generated art and literature winning prizes and raising questions about what we mean by worth and depth.

One thing to remember is that A.I. as a research field has a lineage going back to the 1950s, when scientists and philosophers began to align on the need to explore its potential, building on the work of the polymath Alan Turing, whose inquiry into the mathematical possibility of artificial intelligence pretty much revolutionized the field. It is only now, with dramatic improvements in sheer compute power and the availability of the capital required to run the machines that train LLMs, that we have started to see results. The use cases will come, in time. It is a powerful enough piece of tech that it cannot be ignored. Just as NFTs, in the grand scheme of things, are less about being part of a club run by apes and more about concepts of provenance and ownership and how those will play out in the future, I think the LLM use cases most talked about in the media are only scratching the surface, and the rapid pace of development in this field will shake things up. A lot.

Maestro: ChatGPT Orchestrating Reason

The popular understanding of ChatGPT as an advanced chatbot is that OpenAI fed it the entire Library of Alexandria and the whole of the internet as its pre-training dataset, so when you ask it a question, it draws on its training and its grasp of grammar, context, and even reasoning to spit out an answer. The underlying GPT model learns the relationships between words and the contexts in which they are used, and can predict what comes next in a sentence (next-token prediction). When put to the test by enthusiasts on logic problems of varying difficulty, ChatGPT has shown marked improvements in understanding complex instructions and data to come up with solutions. It sort of 'builds a world model' that considers the nature and definition of the problem as stated, along with the dimensions of time and space it is situated in.
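To make "next-token prediction" a little more concrete, here is a toy sketch in Python. It is emphatically not how GPT works internally: GPT uses a transformer neural network that conditions on the whole context window, while this hypothetical bigram model (`train_bigrams`, `predict_next`, and `generate` are illustrative names of my own) only counts which word tends to follow which in a tiny made-up corpus. What it does share with the real thing is the autoregressive loop: each predicted word is appended to the context and fed back in for the next prediction.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(corpus: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the corpus."""
    words = corpus.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(context: list[str], follows: dict[str, Counter]) -> str | None:
    """Greedy guess: the most frequent follower of the last word.

    A real LLM scores every possible next token using the entire context;
    this toy looks only at the final word and returns None if it has no data.
    """
    candidates = follows.get(context[-1])
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


def generate(prompt: str, follows: dict[str, Counter], max_new_tokens: int = 5) -> str:
    """Autoregressive loop: predict a word, append it, repeat."""
    context = prompt.lower().split()
    for _ in range(max_new_tokens):
        nxt = predict_next(context, follows)
        if nxt is None:
            break
        context.append(nxt)  # the generated word becomes input for the next step
    return " ".join(context)


follows = train_bigrams("the cat sat on the mat the cat ate the fish")
print(generate("the", follows))  # the cat sat on the cat
```

The output is fluent-looking but meaningless, which is the point: scale, architecture, and training are what separate this word-counting from the behavior described below.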

It is not unfair to call ChatGPT a reasoning engine rather than an encyclopedia of all possible human knowledge. Training the models enhances the effectiveness of this reasoning ability, not just how much they know. Paradoxically, the current limitations in the models' performance stem from their incomplete knowledge rather than their reasoning capabilities. Sam Altman has said as much in a WSJ interview:

"The future might see AI models requiring less training data, focusing more on their reasoning capabilities."

Nobel laureate and behavioral psychologist Daniel Kahneman, in his book "Thinking, Fast and Slow", popularized the concepts of System 1 and System 2 thinking. System 1 is an automatic, intuitive type of thinking we use for split-second decisions and for things we do without consciously thinking about them, like driving on your daily commute while you ponder the meaning of life. It relies on various heuristics, or mental shortcuts, that enable us to make these quick decisions. System 2 is a more deliberate mode of thinking used for decisions that require focused concentration, such as something as serious as a mortgage: the decision to buy a house, weighing all the various factors, falls under this type, as does working on design problems as an engineer. This type of thinking generally leads to better decisions and is less prone to our inherent biases. We usually use both types of thinking to navigate daily life, depending on the situations we encounter.

A recent Nature paper exploring System 1 and System 2 processes in LLMs showed that "..as the models expand in size and linguistic proficiency they increasingly display human-like intuitive system 1 thinking and associated cognitive errors. This pattern shifts notably with the introduction of ChatGPT models, which tend to respond correctly, avoiding the traps embedded in the tasks." One inference from this is that ChatGPT performs better than the average human on some simple logical and mathematical problems by avoiding the "silly mistakes" an actual person may be prone to.
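The best-known trap of this kind is the bat-and-ball question from Shane Frederick's Cognitive Reflection Test. It is shown here only to illustrate the type of task, and may not be one of the exact items used in the paper: a bat and a ball cost a dollar and ten cents in total, and the bat costs one dollar more than the ball; how much does the ball cost? System 1 blurts out "10 cents". Working it through deliberately, System 2 style, with $b$ as the ball's price in dollars:

$$
\begin{aligned}
b + (b + 1.00) &= 1.10 \\
2b &= 0.10 \\
b &= 0.05
\end{aligned}
$$

The intuitive answer fails the check: a 0.10 ball implies a 1.10 bat, for a total of $0.10 + 1.10 = 1.20 \neq 1.10$.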

The real clincher is the secondary finding. At first glance, and based on initial CRTs (Cognitive Reflection Tests), the model looks like a conveyor belt of words: it reads text, predicts the next word, and keeps going. This involves a single forward pass through the neural net for each predicted token, which is how it was designed to work. Additional studies, however, showed that it is also doing System 2-type thinking under the hood. From the same paper:

“While generating each consecutive word, LLMs re-read their context window, including the task provided by a user, as well as the words they have thus far generated. As a result, LLMs can employ their context window as a form of an external short-term memory to engage in chain-of-thought reasoning, re-examine the starting assumptions, estimate partial solutions or test alternative approaches”.

The bewildering thing is that this behavior is emergent, unintended by the designers of the models! How did this capacity emerge? For more examples of testing ChatGPT on both the breadth and depth of its reasoning using various types of problems (defined, undefined, or redefined), read this detailed post by Massimo Passamonti. Despite its known weaknesses, ChatGPT represents a significant advance over previous models.

Practical applications have started making their presence felt, from using it as a research assistant (capable of doing the work of an army of assistants) to what Atlantic writer Derek Thompson is trying out: creating a personalized GPT for himself that can read academic papers and summarize their key findings. It will be interesting to see, in a year or two, what productivity gains this new tech delivers from a macroeconomic standpoint. Of course, the challenge is that productivity gains are a lagging indicator that takes time to manifest in the economy. Industries and companies adopting these technologies need time to adjust existing management practices, and the labor force also needs time to adapt to the new workflows.

Creative Capital and the New Professional Elites

"Escape the 9-5, Live Anywhere, and Join the New Rich" goes the subtitle of the digital nomad era's bible, "The 4-Hour Workweek" by Tim Ferriss. Whatever your views about running a "lifestyle business" or the side-hustle culture running through the veins of millennial America, the book, besides giving a framework and tactics for realizing that lifestyle, also answered a question, or in my mind asked one: can you abandon the deferred-life plan and create a lifestyle where you are economically compensated for creating and selling products and services people need and value, while being untethered to a physical office or even a city? For what it's worth, I personally like Tim (as the online persona I know) and enjoy the content he puts out. His podcast invariably features some of the most interesting people across diverse domains.

Richard Florida, in his seminal work "The Rise of the Creative Class", tried to identify this new socioeconomic group: the creative class, professionals predominantly in the knowledge and creative industries who shape the cities and local economies they flock to. Besides Silicon Valley, we have seen the rise of cities like Chiang Mai and Berlin as global destinations for digital nomads. Combining this notion of the creative class with the emergence of LLMs that affect most industries, in 10, 15, or 20 years we will likely see a reshaping of the economy and of what it needs from its labor force in the face of rapid automation. I will save concerns about even greater wealth inequality in a tech-platform-led landscape for a separate post; this thought experiment argues the utopian case.

Between the time we have massive automation, with a large number of jobs disappearing from the economy, and the time Skynet takes over the world and turns us into paperclips, we may have a sliver of opportunity to try out this experiment: encourage, even demand, that a section of the labor market, these creative-class folk, do their own thing. What will they do? They will use their creative capital to think, ponder, and experiment, and create the jobs of tomorrow. That is some science fiction there, you'd say? Well, I would remind you that cryptocurrencies and the metaverse were ideas explored in sci-fi novels by the likes of Neal Stephenson and William Gibson long before they became real. Just no more NFT traders, please. We didn't have jobs like UX designers, Chief Diversity Officers, or AI girlfriends in the 1950s (someone has to write the code for that obedient companion, folks). So, we will need these imaginative people to envision new futures and new jobs their grandchildren can prosper in.

Paul Graham had a set of tweets about this. We will have a pool of this creative class that will be highly leveraged by virtue of being the creators and IP owners of the high-quality content used to train the models. The rest of the populace will be using the fruits of these technologies to communicate better with each other, their customers, and their audiences. What about economic capital? If this group is going to create the good stuff outside the give-me-money-for-my-creative-labor economy, what will fulfillment look like for them? Is human nature compatible with an existence where mimetic envy is removed from the equation? Will these people not desire luxury vacations to Mars and covet premium tickets on the hyperloop from LA to NYC to see a show at Madison Square Garden?

As Big Tech continues its dominion over the data economy, trying to own the likeness of movie stars and to use copyrighted content for training without any plans to compensate publishers or content creators, the strife between the two sides, corporations deploying these technologies at scale and the workers, will continue. I suspect this will not be tenable for long, as evidenced by recent developments like the SAG-AFTRA/WGA strikes that rattled Hollywood and this lawsuit by the NYT against OpenAI and Microsoft. The law will have to adapt.

In anticipation of this new world, one question asked with regular frequency among the knowledge-worker crowd is: what new skills should we be picking up? Some of them may be unimaginable to us now, given how rapidly this space is advancing, but some common-sense ideas are sure to stand the test of time. Some variation of "asking better questions" is one. There is quite a bit of alarmism that rapid automation using LLMs will obliterate a lot of jobs from the current market. But when asked what new jobs A.I. has created since ChatGPT was launched, some people point to one that has popped up on job boards: the "Prompt Engineer". Who is hiring? A.I. startups, and companies like Anthropic, which launched Claude, a chatbot similar to ChatGPT.

To my mind, this is not a direct replacement for core tech skills like Python or Photoshop, but a skill that can enable someone with deep knowledge in an existing domain to become 10x more productive (and creative), since they will be able to prompt LLMs more precisely and with a better chance of getting unexpected and surprising results. At a very high level, prompt engineering is a fancy term for using natural language (English) to get an expected output.
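As a rough illustration of how domain knowledge turns into a better prompt, here is a hypothetical Python helper that assembles a prompt from the pieces an expert would bring: a role, background context, the task, constraints, and the desired output format. The function name, the section labels, and the telehealth example are all my own assumptions, not any vendor's required format or API; the resulting string could simply be pasted into a chatbot.

```python
def build_prompt(role: str, context: str, task: str,
                 constraints: list[str], output_format: str) -> str:
    """Assemble a structured prompt (a hypothetical template, not a vendor API)."""
    lines = [
        f"You are {role}.",
        "",
        f"Context: {context}",
        f"Task: {task}",
        "Constraints:",
        *[f"- {c}" for c in constraints],  # one bullet per constraint
        f"Output format: {output_format}",
    ]
    return "\n".join(lines)


# A vague request versus one shaped by (made-up) domain expertise.
vague = "Summarize this paper."
structured = build_prompt(
    role="a health economist reviewing research for a policy brief",
    context="The attached paper studies the effect of telehealth on rural hospital admissions.",
    task="Summarize the key findings and the study design.",
    constraints=[
        "Flag any limitations the authors acknowledge",
        "Do not speculate beyond the paper's data",
    ],
    output_format="Five bullet points, then one sentence on how strong the evidence is.",
)
print(structured)
```

The template itself is trivial; the value lies in knowing which context, constraints, and format matter, which is exactly the domain depth the paragraph above argues for.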

We tend to focus a lot on the output in discussions about A.I. We should put an equal amount of thought into the input that produces the output. The skills we are talking about are timeless: critical thinking, and building depth of domain knowledge alongside an interdisciplinary repertoire of skills and interests that enables you not only to ask the machines good questions but also to reason with them engagingly.