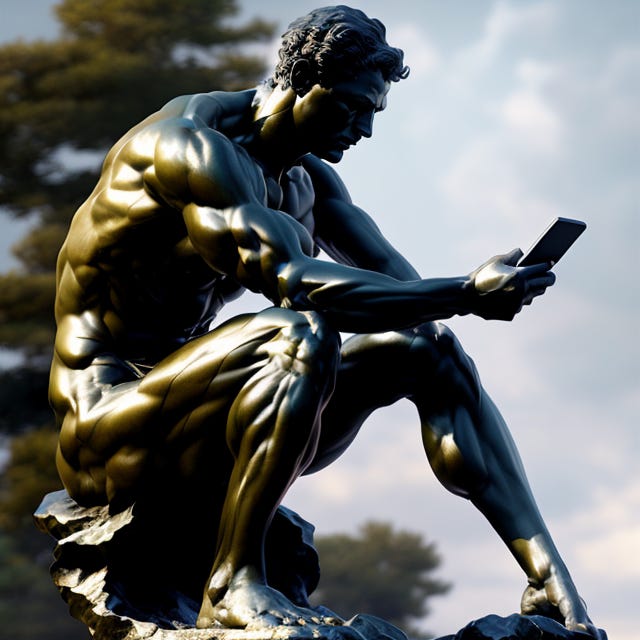

Stable Genius

AI models in the generative media space have blown up in the past year. This genie is not going back in the bottle.

The last couple of years have seen a plethora of artificial intelligence (AI/ML) models create a lot of buzz, especially among the extremely online cognoscenti. When OpenAI released GPT-3, its language model based on deep neural networks, in incremental phases, it unleashed the imaginations of experts and hobbyists alike about where such large language models (LLMs) could be used effectively. The use cases span diverse areas: building automated chatbots for customer service teams, cutting down the time it takes to write well-converting marketing copy and product descriptions, and translating legacy code bases into simple-to-understand English. There have been way too many think pieces recently about the coming wave of AI-generated media.

The internet's evolution from the 1990s through the 2000s saw the medium reshape how we consume text, then audio, then video. Large AI models are following a similar path. They started with text: GPT-3 has around 175 billion parameters and was trained on humongous amounts of text. Naturally, the next medium in line to be seized by AI models would be images, or visual media.

Stability AI is one of the companies at the forefront of this hype-fueled space. Its alternative to OpenAI’s image generator DALL-E 2, Stable Diffusion, has received as much bewildered admiration as it has attracted controversy. That may be because it is open source: anyone can download it and modify it to run their own version to generate media. It is easy to see why, in the current moment, such a move invites criticism. Along with the positive ethos of open source, a key characteristic of large-scale software development and distribution, come its negative consequences, namely the danger of misuse to create violent imagery and content that can be used as propaganda and disinformation.

Computer vision, the interdisciplinary field of AI research that focuses on classifying, extracting and interpreting data from images and videos in a way that mimics human vision, has made spectacular advances in recent years. Social media behemoths armed with massive amounts of training data have made computation at a humongous scale possible. The challenge has been that these sophisticated models have huge memory requirements. That is why the release of Stable Diffusion is an impressive milestone for broad-based access to such high-performance models. The dual benefits of high-fidelity generated images and relatively low resource requirements have made its launch much talked about in the tech press.

Under the hood, Stable Diffusion uses an image synthesis model called latent diffusion. In brief, latent diffusion models break down the process of making an image into a series of applications of denoising autoencoders, working in a compressed latent representation of the image rather than on raw pixels, which helps keep resource requirements low. The approach was introduced in a paper by researchers at Ludwig Maximilian University of Munich.
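To make the idea of "a series of applications of denoising" concrete, here is a toy sketch in plain Python. It is not Stable Diffusion's actual code. The names `toy_denoiser`, `generate` and `distance` are hypothetical, the four-number "latent" stands in for a compressed image, and the denoiser cheats by knowing the target. In a real latent diffusion model the denoiser is a trained neural network guided by the text prompt, and a separate decoder turns the final latent back into pixels.

```python
import random


def toy_denoiser(latent, target):
    """Hypothetical stand-in for a trained network: estimate the noise in `latent`.

    A real model never sees the target; it learns to predict noise from data.
    """
    return [x - t for x, t in zip(latent, target)]


def generate(target, steps=50, step_size=0.1, seed=0):
    """Start from random noise and repeatedly remove a bit of the estimated noise."""
    rng = random.Random(seed)
    # Begin with pure Gaussian noise in a tiny "latent" space.
    latent = [rng.gauss(0.0, 1.0) for _ in target]
    for _ in range(steps):
        predicted_noise = toy_denoiser(latent, target)
        # One denoising application: subtract a fraction of the predicted noise.
        latent = [x - step_size * n for x, n in zip(latent, predicted_noise)]
    return latent


def distance(a, b):
    """Euclidean distance between two vectors of equal length."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


target = [0.5, -1.0, 2.0, 0.0]        # assumed "clean" latent, for illustration only
start = generate(target, steps=0)     # the initial noise, before any denoising
result = generate(target, steps=50)   # the same noise after 50 denoising steps

print("distance before denoising:", distance(start, target))
print("distance after denoising: ", distance(result, target))
```

Each pass removes only a small share of the estimated noise, so the result emerges gradually over many steps rather than in one shot. That repeated refinement is the part this sketch shares with the real thing.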

***

Emad Mostaque, a former hedge fund manager who leads Stability AI as CEO, wants to bring the power of machine learning to the masses. His company’s motto reads ‘AI by the people, for the people.’ At a time when disenchantment with big platform companies is at a peak and we are still grappling with the implications of behavior modification driven by the design of their extractive business models, such lofty pronouncements will need to be stress tested. By his own admission, Mostaque was surprised that the application to bring such cutting-edge model research mainstream recognition would be generating media. In a recent podcast, he went on to explain:

You shouldn’t underestimate media. The easiest way for us to communicate is doing what we’re doing now. We’re having a chat with our words. The next hardest is writing each other emails or chats. To write a really good one is very hard.

“I made this message long because I could not spare the effort to make it shorter,” I think someone once said.

A lot of funding is definitely pouring into this area of ML model development as more commercial uses become apparent. The AI Index Report published by Stanford in 2022 has a couple of takeaways worth noticing. The first is that the technical performance of these models has grown at a staggering pace. Combined with a reduction in the cost of training image classification systems and a massive improvement in the time it takes to train them, this will likely lead to wider commercial adoption as the economics become attractive to VCs.

The second is the elevation of AI ethics from an academic specialty to a more serious industry-wide and society-wide concern. The number of publications, research papers and conferences focused on AI ethics has seen double-digit growth in the last few years. Algorithmic fairness, or the lack thereof, in technologies now available to the public is raising vital questions about changing notions of authorship and the impact on creative professionals and artists. The magic you see on the screen after you enter a text prompt is enabled behind the scenes by models trained on vast sets of images and metadata scraped from the internet. Think of sources ranging from big social platforms like Pinterest and Tumblr to stock image sites like Getty Images and many more. Many of the reference images and media were created by living artists, which raises questions about the ethics of appropriating their creative blood and sweat and using their work outright without any form of consent. Even if the use of scraped data falls within the current provisions of copyright and other laws today, it raises the question: is the law too slow to define and codify guardrails for such fast-evolving technologies?

Mostaque is hopeful about some of these concerns. He argues that developing new verification and attribution systems, along with building and releasing these models in the public sphere with open access, is the ideal way forward. Referring to his aversion to big tech controlling the development of these technologies, he quipped:

Should the tools to allow anyone to be creative, anyone to be educated, and other things like that be run by private companies? Probably not. They should be a public good. Should they be United Nations and other bureaucratic hell holes? Probably not.

The jury will be out for the foreseeable future on how Mostaque’s outlook plays out, as it always is when a newfangled technology starts putting down roots in digital culture and media consciousness. The implications are only just beginning to be explored. These look like the basic building blocks that may someday realize the cyber fantasies of conjuring whole worlds in the holodeck.